Machine learning: hype or hope, with one part snake oil. Adding snake oil makes everything, Machine Learning included, seem more capable than it is when you peek beneath the covers, or at least more slippery; we will get to the slipperiness soon.

This is an article I have wanted to write for some time. I work with many Managed Service Providers (MSPs) across the technology spectrum who, in my view, have too much faith in the tools they purchase and are not skilling up their people to match. Failing to develop human capital adequately will cause problems for any Managed Service Provider or Security Services Provider in supporting its clients in the future.

As a passionate cyber security professional, I help businesses correctly identify the threats in cyber security, as well as the many promises within the industry that can become overcommitments. Over-promising and over-reliance on tools are a very real threat in 2022.

My hope for this article is that, when reviewing Artificial Intelligence in cyber security, organisations and MSPs will look more closely at their mix of human capability vs software cyber security tooling and pay close attention to better training the human element.

TLDR

Machine learning in technology and cyber security is useful as an augmentation of what humans can do today. Put simply, when you are looking for the “needle in the haystack”, machine learning can remove roughly 80% of the chaff; the remaining 20% is where the effort of human threat hunters and security analysts will be concentrated.

ML in software tools does not make cyber security easier for clients and Managed Service Providers, nor does it remove the Managed Service Provider’s responsibility to keep its clients secure.

On the contrary, software tools actually require the MSP or end client to perform more checks and detection reviews, because once a tool is in use, any “false negative” will immediately result in a director-level Q&A “session” about why the cyber breach was not discovered when they are paying $5-12++ per user per month for a service.

Summary

- You need human cyber security professionals, either directly, shared, or in partnership with your MSP

- Machine learning is helpful but is not definitive; Tesla’s ML still drives into cars and people

- Cyber security is about layers of protection, consider the zero trust model

- Keep your historical log data, you may need it

- Reduce your attack surface whenever possible; attacks don’t work when stuff cannot run

- Do not tell everyone what your security tools are, it makes you vulnerable

If you have some endurance and are still interested, please keep reading; otherwise, thank you for your time.

Introduction to AI and ML

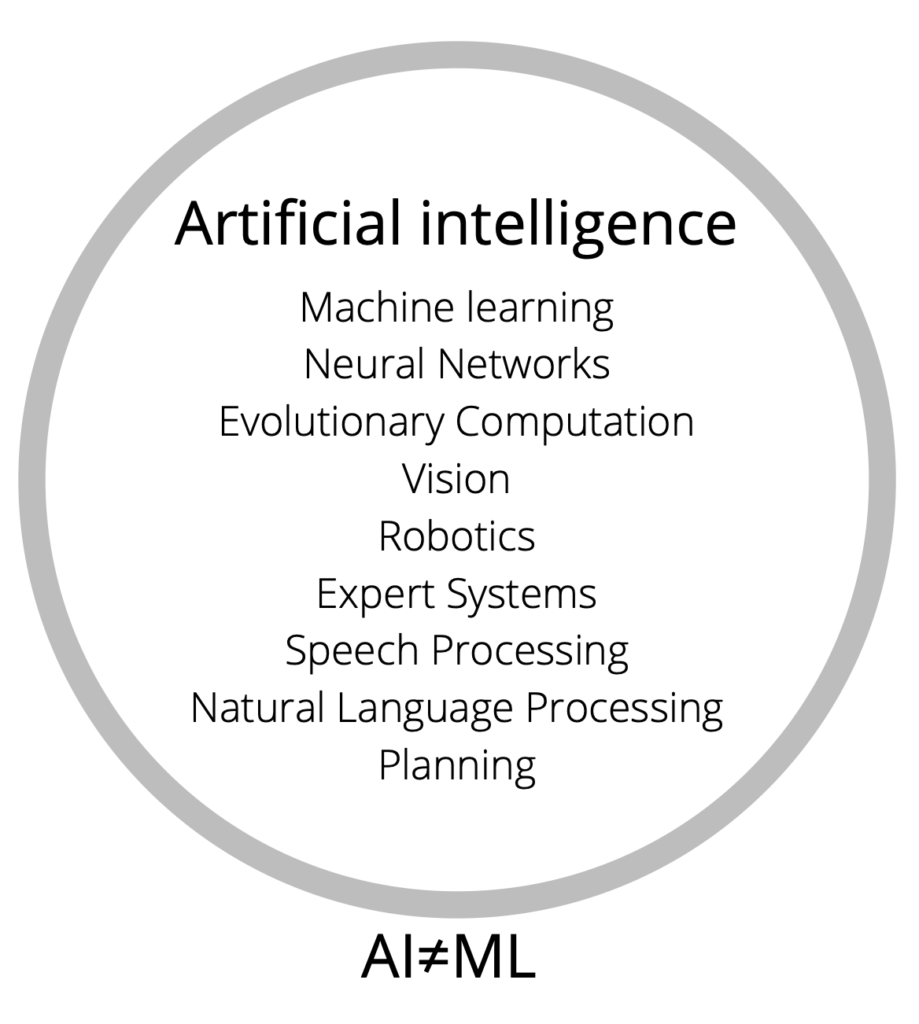

For the past couple of years, Machine Learning (ML) and Artificial Intelligence (AI) have been intertwined and cross-purposed as the supposed end of the human threat hunter or cyber security analyst, but is this really the case? Will cyber security tools touting advanced Artificial Intelligence capabilities really take over from us within the next 5-10 years? My answer is “NO!”: the capitals are for certainty and the exclamation mark for surety.

What is Artificial Intelligence and what is Machine Learning?

In layperson’s terms, the difference between the two technologies is as follows:

Artificial Intelligence

Artificial Intelligence, in the fullest sense of the term, means a system that, given some initial state, would be able to learn as we do: it would “live” and learn from the information provided to “it” or sensed by it.

Artificial Intelligence at that level is so far removed from where technology is now that it is basically still fantasy in 2022. There are certainly big breakthroughs, and this could change at any time, but currently the computational capability is not available, and even if it were, we do not yet know how to program something to be sentient and aware.

Forbes has a basic article on the state of Artificial Intelligence in general usage here if you would like to read further. In summary, AI is useful for language processing and creating “prose”, but it does not have the capability or capacity today to deal with the seemingly infinite ways in which a human (or other animal) experiences their world.

This is what biological lifeforms do every day, at a level of complexity well beyond any current technology or any technology currently being evaluated in a Skunkworks laboratory somewhere (I cannot back this statement up, so I am going out on a limb here).

Machine learning

Machine learning is a subset of Artificial Intelligence. Based on what we currently believe makes up the field of Artificial Intelligence, machine learning is one of the components that will bring about true Artificial Intelligence sometime in the future.

Machine learning uses a set of data fed to it to determine an outcome. Give a machine learning model enough pictures of dogs and it will pick out the dog correctly in “most” images; but if the model has only been trained on one subject at a time, a picture with both a cat and a dog may not be identified correctly. Pretty good for finding cats in my photo roll, but not necessarily for finding all the cats I have!

Using ML, an image of a dog like the one above should be correctly identified as a dog, and today even the breed should be identified correctly. It is amazing to think how far we have come in ten years or less.

But the example above may cause a machine learning (ML) model problems, either because of a lack of similar training images or simply because the model has not been “trained” to identify more than one subject.

Fundamentally, given enough data, a machine learning model can give you a result based on “what it knows”, not on what it does not know. In cyber breaches, new techniques are the unknowns, or the “undetectables” (a great name for a new film starring Tom Cruise, riding his renewed fame off the back of Top Gun 2!).

The undetectables appear when the training data is biased, poor or insufficiently varied, leaving the detection capability lacking in substance, or when a new APT uses new attack methods. Generally, the more varied and relevant the data fed into a machine learning model, the more accurate the result; differences in data quality can therefore become a significant bottleneck for the detection capabilities of software tools.
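To make the “what it knows” point concrete, here is a minimal Python sketch of a toy nearest-centroid classifier. The two-dimensional feature vectors and the “dog”/“cat” labels are made-up stand-ins, not real image features, and the `max_distance` cut-off is a hypothetical parameter for illustration. The point is only that a plain classifier always answers with one of the labels it was trained on, even for an input unlike anything in its training data, unless someone explicitly adds an “unknown” cut-off.

```python
import math

# Hypothetical toy training data: made-up 2-D feature vectors per known label.
TRAINING = {
    "dog": [(1.0, 1.2), (0.9, 1.0), (1.1, 0.8)],
    "cat": [(4.0, 4.1), (4.2, 3.9), (3.8, 4.0)],
}


def centroid(points):
    """Average each coordinate of a list of 2-D points."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


CENTROIDS = {label: centroid(pts) for label, pts in TRAINING.items()}


def classify(sample):
    """Return (closest known label, distance). There is no 'unknown' answer."""
    return min(
        ((label, math.dist(sample, c)) for label, c in CENTROIDS.items()),
        key=lambda pair: pair[1],
    )


def classify_or_unknown(sample, max_distance):
    """Like classify(), but refuse to label samples far from all training data."""
    label, distance = classify(sample)
    return label if distance <= max_distance else "unknown"


print(classify((1.0, 1.0)))    # close to the "dog" examples
print(classify((30.0, -5.0)))  # nothing like the training data, still gets a label
print(classify_or_unknown((30.0, -5.0), max_distance=3.0))
```

Even with the cut-off, the model can only say “unknown”, not what the new thing actually is, and choosing `max_distance` is itself a human judgement.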

A real-world example is Tesla’s self-driving capability, which is still regularly caught out by unexpected situations in real-world driving that a human would implicitly understand and adapt to or avoid. We are not merely trained: we can make a determination from an immensely varied knowledge base that we have built up since birth.

Despite this failing, the capability of self-driving cars is simply amazing, but it shows the lack of real awareness in the Artificial Intelligence field today. AI is not capable of providing much of what cyber security marketing claims it can do.

How advanced are cyber security AI and ML in 2022?

Artificial Intelligence is a topic I am passionate about, a passion that came from working briefly with organisations such as the University of Queensland’s cyber security faculty on what they are doing in this exciting and ever-growing field of study. If you are still with me, I shall now explain the use of AI and ML in cyber security, and say a big thank you for sticking around so far.

The state of AI/ML in cyber security

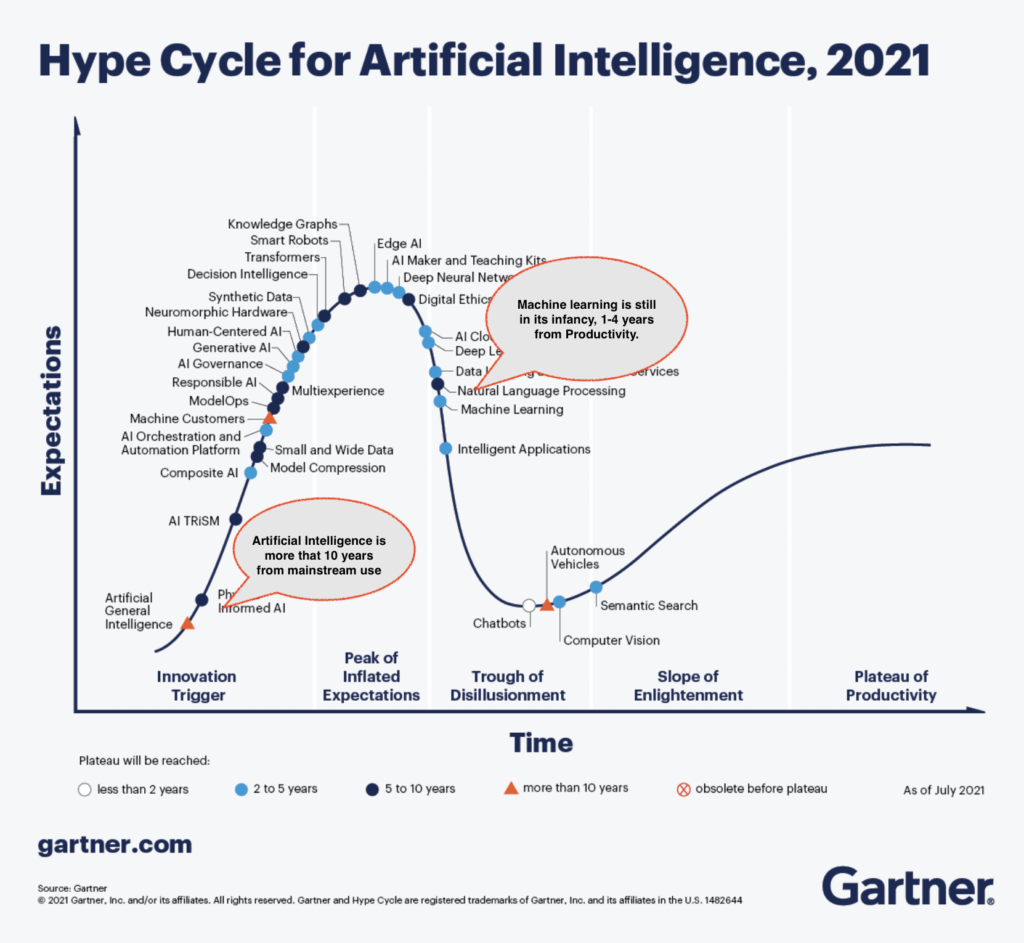

Gartner has a great framework that it calls the “Hype Cycle”. Look out for the Hype Cycle covering the technologies you are interested in, as it gives insight into where they sit in relation to the market.

Today I present the Hype Cycle for Artificial Intelligence. The chart above breaks down the various AI-related technologies and their progression towards real-world usefulness or abandonment; some technologies will not survive the winter of the “Trough of Disillusionment” to come out the other side as tech that industry adopts.

Link to the Gartner Artificial intelligence hype cycle here.

General Artificial Intelligence, as presented in the Gartner Hype Cycle, is “at least” 10 years away from mainstream adoption. There are many roadblocks to overcome before AI will be anything more than an empty promise on every cyber security vendor’s marketing slides about advanced detections. Advanced detection is YOU, the operator.

Applying AI and ML in cyber security

Having identified and discussed the differences between Artificial Intelligence and Machine Learning, both generally and in cyber security, I want to explain how ML is useful and what its limitations are for cyber security.

I previously wrote about Endpoint Detection and Response failures here; that article demonstrates with real-world cases why machine learning plus marketing does not equal cyber security and will not secure your organisation on its own.

MITRE Evaluations

The MITRE ATT&CK Evaluations are a platform where 30 vendors pay to demonstrate their products’ efficacy at detecting an Advanced Persistent Threat from the previous year, or a lifetime ago in tech.

The MITRE Evaluations clearly demonstrate that machine learning models cannot detect what they have not been “trained” to detect, and that, except for the top three or four vendors, they are not really that useful yet.

What are the MITRE Evaluations? Thirty vendors go up against a “known known”, something that cyber security professionals are aware of and “thwarted” last year. The question is how many vendors’ machine learning detects last year’s attack with an analytic detection, an analytic detection being a ‘good’ detection because the tool raised an alert rather than just providing raw data for a human to review.

Summary: no vendor picked up every signal. SentinelOne came closest with 108 of 109 sub-steps, but the difference between the best and worst vendors is massive.

Advanced AI and machine learning models should be capable of detecting these advanced threats from the previous year. Yet of the 30 vendors with their detection and response platforms, most were less than 50% effective against last year’s Advanced Persistent Threat, and only three or four would earn a pass mark.

In 2022, most cyber security Endpoint Detection and Response technologies could not sufficiently detect a 2021 Advanced Persistent Threat almost a year after it was used, despite AI capabilities that should, in theory at least, augment the “known knowns” with data from machine learning models to detect it.

If a vendor has an analytic detection rate below 80% against known, older APTs, what might the reason be? According to the marketing, machine learning should help; but for ML to beat the latest APTs, the training models need to know about some part of the current APT, and the vendor providing the solution must have updated its training models. Tools that fail against known APTs have not yet been trained to detect them, or at least not well enough.
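The arithmetic behind these figures is simple. This small Python sketch computes an analytic detection rate from the 108 of 109 figure quoted above and checks it against the 80% bar used in this article. The 80% pass mark is this article’s yardstick, not a threshold MITRE publishes, and the second vendor (54 of 109) is hypothetical.

```python
def analytic_detection_rate(analytic_detections: int, total_substeps: int) -> float:
    """Fraction of evaluation sub-steps that produced an analytic detection."""
    if total_substeps <= 0:
        raise ValueError("total_substeps must be positive")
    if not 0 <= analytic_detections <= total_substeps:
        raise ValueError("analytic_detections must be between 0 and total_substeps")
    return analytic_detections / total_substeps


PASS_MARK = 0.80  # the 80% bar used in this article, not an official MITRE threshold

# 108 of 109 is the SentinelOne figure quoted above; 54 of 109 is a
# hypothetical vendor sitting just under the 50% mark.
for name, hits in [("SentinelOne", 108), ("hypothetical vendor", 54)]:
    rate = analytic_detection_rate(hits, 109)
    verdict = "meets" if rate >= PASS_MARK else "misses"
    print(f"{name}: {rate:.1%} ({verdict} the {PASS_MARK:.0%} bar)")
```

A single percentage hides which sub-steps were missed; the missed ones are where a human analyst has to fill the gap.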

This is the gap between the promise of AI/ML and the reality. There is a lot of snake oil still around, and the damage it can cause is that organisations taking a tools-first approach blindly continue their business operations while a breach is in progress, falsely believing that their cyber security solution is protecting them.

Why Machine learning can fail

As a quick primer on machine learning, here is a description of machine learning models:

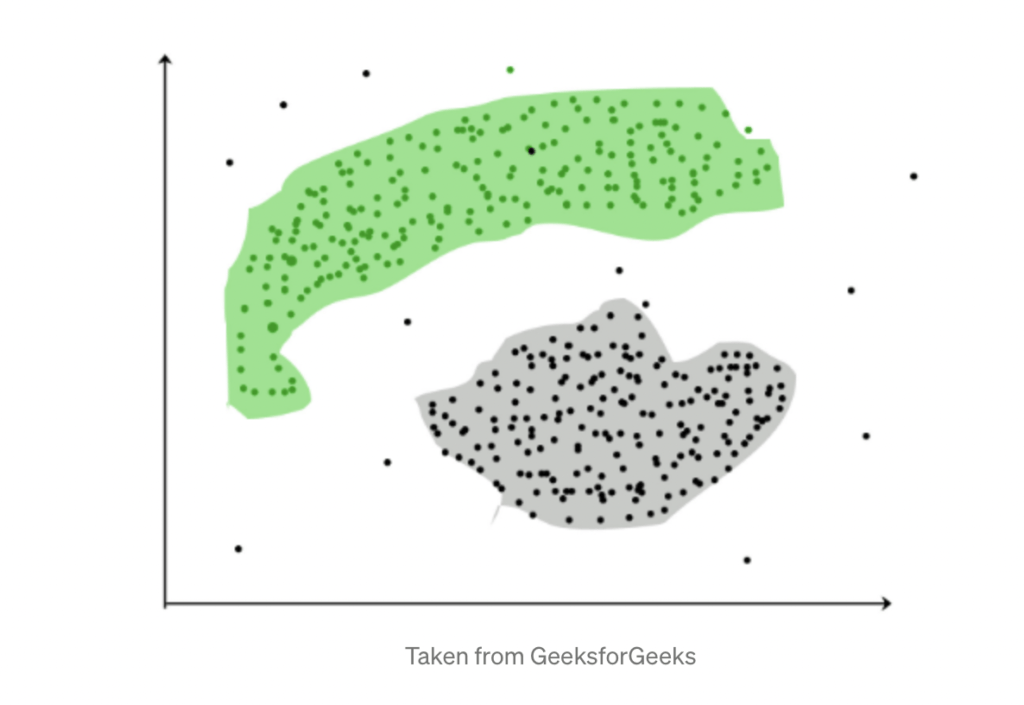

In cyber security we mostly look at what is referred to as unsupervised learning, and more specifically clustering, where various activities are correlated until some causation can be inferred from the activities detected. As those in cyber security know, the three outcomes that matter are false positives (causing alert fatigue), correct detections, and false negatives, where a threat actor creates mayhem right under the nose of the organisation’s security team without being noticed.
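A minimal Python sketch of how these outcomes are usually tallied, given the set of events a tool flagged and the set of events later confirmed as malicious. The event IDs are hypothetical. It also counts true negatives (benign events correctly left alone), the fourth cell of the standard confusion matrix.

```python
def outcome_counts(all_events: set, flagged: set, malicious: set) -> dict:
    """Tally detection outcomes for one review period."""
    return {
        "true_positive": len(flagged & malicious),   # correct detections
        "false_positive": len(flagged - malicious),  # noise that drives alert fatigue
        "false_negative": len(malicious - flagged),  # the breach nobody noticed
        "true_negative": len(all_events - flagged - malicious),
    }


events = set(range(10))  # hypothetical event IDs 0-9
malicious = {2, 7}       # confirmed malicious after investigation
flagged = {2, 3, 4}      # what the tool alerted on

print(outcome_counts(events, flagged, malicious))
```

Event 7 is the false negative: nothing in the tool’s output points at it, which is exactly why a human still needs to look.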

In life and in statistics, correlation does not equal causation, but that is a subject for another day…

Clustering is an unsupervised technique that involves the grouping, or clustering, of data points. It’s frequently used for customer segmentation, fraud detection, and document classification.

Terence Shin
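As an illustration of how clustering can be used for detection, here is a minimal Python sketch: it runs a plain k-means over a baseline of “normal” activity, then flags new events that sit far from every cluster centre. The two features (logins per hour and distinct hosts contacted), the data points and the distance threshold are all hypothetical, and real products use far richer features and their own, undisclosed, models. Note the last new event: activity that imitates normal behaviour lands inside a cluster and is not flagged.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain k-means, initialised with the first k points for determinism
    (real implementations typically use k-means++ or random restarts)."""
    centroids = list(points[:k])
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        updated = [
            tuple(sum(dim) / len(members) for dim in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def anomalies(events, centroids, threshold):
    """Return events whose distance to the nearest centroid exceeds threshold."""
    return [e for e in events if min(math.dist(e, c) for c in centroids) > threshold]


# Hypothetical baseline: (logins per hour, distinct hosts contacted)
baseline = [(10, 2), (50, 5), (12, 3), (52, 6), (11, 2), (48, 4), (9, 1), (51, 5)]
centres = kmeans(baseline, k=2)

new_events = [(11, 2), (200, 40), (49, 5)]
print(centres)
print(anomalies(new_events, centres, threshold=10.0))
```

Only the wildly unusual event is flagged; an attacker who keeps their activity looking like the (49, 5) event stays within the bounds of “normal”.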

How easy might it be to defeat clustering? If cyber criminals do not know the organisation’s capabilities and the cyber security tools it uses, it is not trivial, but it is quite possible.

If they do know what you use to protect and secure your business IT systems, they can easily target you with their knowledge of your detection solution.

Pro tip: as a client, do not become a reference for any cyber security product, except through word-of-mouth references.

An example

- A cyber criminal who gains persistence on your corporate network will review the services running on the connected endpoints for security tools, and will use prior knowledge of those tools to stop them running at start-up and to delete the logs.

- Or they will create a VM on this system and use that as their springboard.

- Once this happens, the infected endpoint is no longer reporting telemetry to any of the security tools, and the lag between these events and the determination that the endpoint is no longer protected can be long enough for a ransomware encryption event or the exfiltration of selected documents.
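One partial mitigation for that lag, sketched here in Python as an assumption rather than any particular product’s feature: treat missing telemetry as a signal in its own right, and alert when an endpoint has not checked in for longer than some maximum interval. The host names, timestamps and the 15-minute window are hypothetical; a shorter window gives an attacker a smaller gap, at the cost of more noise from laptops that are simply switched off.

```python
from datetime import datetime, timedelta


def silent_endpoints(last_seen: dict, now: datetime, max_silence: timedelta) -> list:
    """Return hosts whose most recent telemetry is older than max_silence."""
    return sorted(host for host, seen in last_seen.items() if now - seen > max_silence)


now = datetime(2022, 6, 1, 12, 0)
last_seen = {
    "ws-01": now - timedelta(minutes=2),
    "ws-02": now - timedelta(minutes=45),  # agent stopped reporting
    "srv-01": now - timedelta(minutes=5),
}
print(silent_endpoints(last_seen, now, max_silence=timedelta(minutes=15)))
```

A silent agent is not proof of compromise, but it is a reason for a human to go and look.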

Alternatively, without knowledge of the security tools in use within an organisation, an experienced cyber criminal has time both to stagger the phases of the attack and to choose the tools they leverage, because changing the way an attack occurs may take it outside the bounds of what the security solution knows about and can therefore detect.
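Why staggering works against simple rate-based rules can be shown with a toy Python sketch: a hypothetical rule that alerts when more than five suspicious actions fall into any one-hour window. The same eight actions trip the rule when performed in a burst and pass unnoticed when spread an hour apart. Real detections are more sophisticated than a single count threshold, but fixed windows and thresholds in general can leave similar room to work around them.

```python
from collections import Counter


def windows_over_threshold(event_minutes, window_minutes, threshold):
    """Return the indices of time windows holding more than `threshold` events."""
    counts = Counter(t // window_minutes for t in event_minutes)
    return sorted(w for w, n in counts.items() if n > threshold)


burst = list(range(8))                  # 8 actions in the first 8 minutes
staggered = [i * 60 for i in range(8)]  # the same 8 actions, one per hour

print(windows_over_threshold(burst, window_minutes=60, threshold=5))      # alerts on window 0
print(windows_over_threshold(staggered, window_minutes=60, threshold=5))  # no alerts
```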

This is how zero-day threats become breaches: cyber security detection solutions cannot know what they have not been trained to detect.

Summary: ML – hype, hope or snake oil?

Cyber security machine learning technology is not a replacement for human cyber security experts, nor should it be presented as one. Moreover, Artificial Intelligence does not equal machine learning. Machine learning should be leveraged where it is most beneficial: processing vast swathes of data to reduce the telemetry that a human operator needs to review.

This “vast swathe” of process telemetry and log data should not be ignored or deleted, however; it must be stored within a SIEM for long-term retrieval and analysis.

Machine learning has great potential to help cyber security experts do what we are trained to do: ML will cut down the information we need to process and help against APTs whose techniques and tactics have been trained into the model.

Utilising machine learning will make our lives easier, but ML is only the hammer; humans still have to decide how to hit the nail.

Solution

- You need cyber security professionals, either directly, shared or through your MSP

- Machine learning is helpful, but it is not definitive; Tesla’s ML still drives into cars and people

- Cyber security is about layers of protection

- Reduce your attack surface

- Keep your historical log data, you may need it

- Do not tell everyone what you are doing to be secure, it makes you vulnerable

I leave you with this thought: machine learning models are created by humans and carry human biases, because they are trained on “known knowns”, not “unknown knowns” or “unknown unknowns”, to paraphrase a Donald Rumsfeld quote from a long time ago.

Cyber criminals are human, and humans do unexpected things; therefore, to combat human adversaries, cyber security must remain a human domain in the medium term (5-10 years).

“Ultimately, machine-learning data sets and tools are built by people, and people have blind spots. It’s pretty typical in AI/ML for those blind spots to make inconvenient appearances—often in the results of AI/ML experiments. Care needs to be exercised at every step in the process to check for the introduction of biases and to excise them.”

— Arstechnica

Disclaimer: I am a cyber security professional, and I speak with clients every day about topics and issues within cyber security and the broader technology industry. I am not an expert in Artificial Intelligence or Machine Learning, and I always appreciate comments and emails about what I have written.

Please leave us a comment or reach out through the form here if you would like to get in touch.
