Generative AI: what is real, what is not, and how to tell

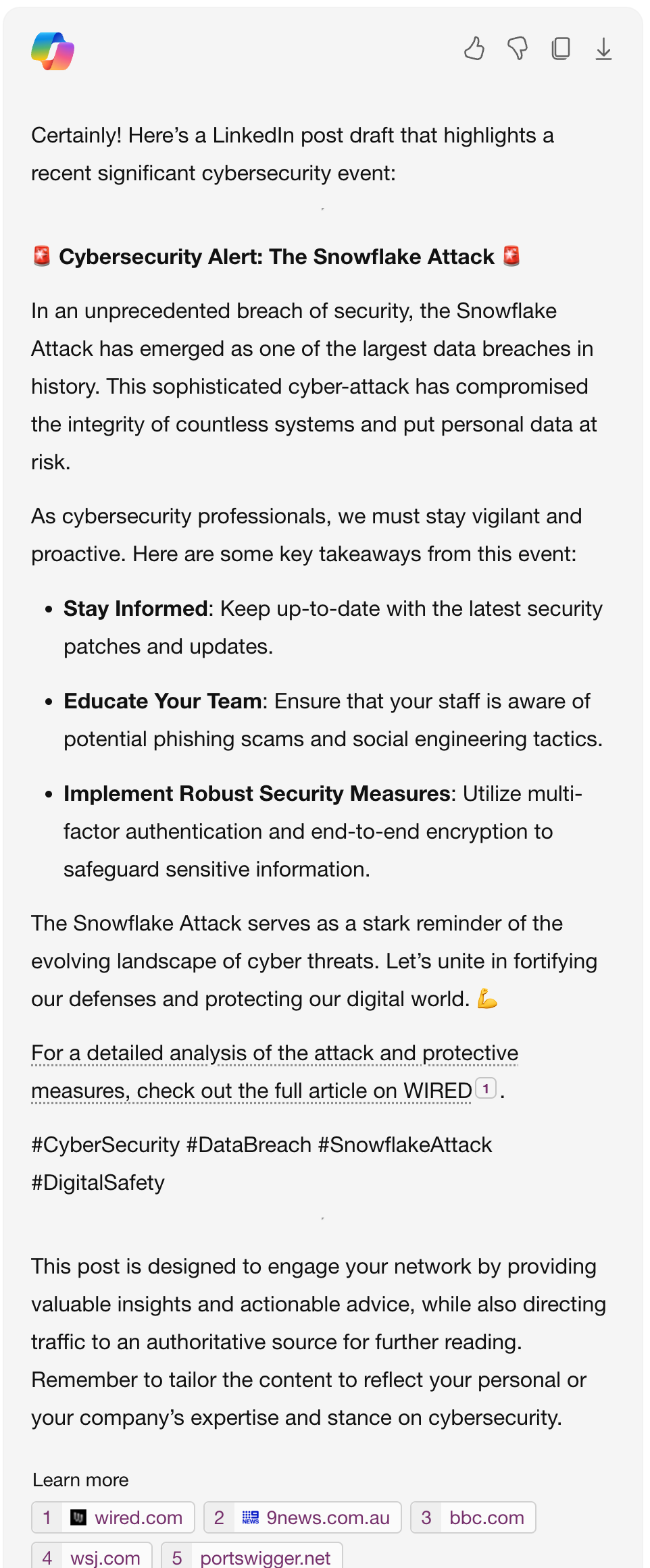

I asked a Large Language Model, Bing Chat Enterprise (the version that protects what I ask the LLM from being sent to the World Wide Web), to create a Cyber Security news story that would garner clicks from my LinkedIn connections. The outcome is shown above.

In fairness, my prompt was probably not detailed enough to rule out “fake news”, so the above is what Bing Chat supplied me as an article that will generate attention!

The content is certainly going to cause questions to be asked, because it is completely Trump’ed! It is Fake News: Snowflake did not suffer a breach at all.

I write this to highlight the danger that trusting Generative AI and Large Language Models creates. Several countries are now using LLMs to create news content, and there have already been several incidents where the generated content was not real: the event never happened. Checking depends on a human, and that checking is often insufficient, because humans are not always focused when reviewing, and if the content looks OK we will accept it as genuine.

There is real danger in trusting the information provided by these ‘Word Salad makers’, and we need to be aware that the more general the training data, the greater the likelihood of errors in the response.